Coexisting With AI: Kay Firth-Butterfield on Governing Technology That Shapes Our Lives

Why understanding AI across work, family life and society will define how successfully we live alongside it.

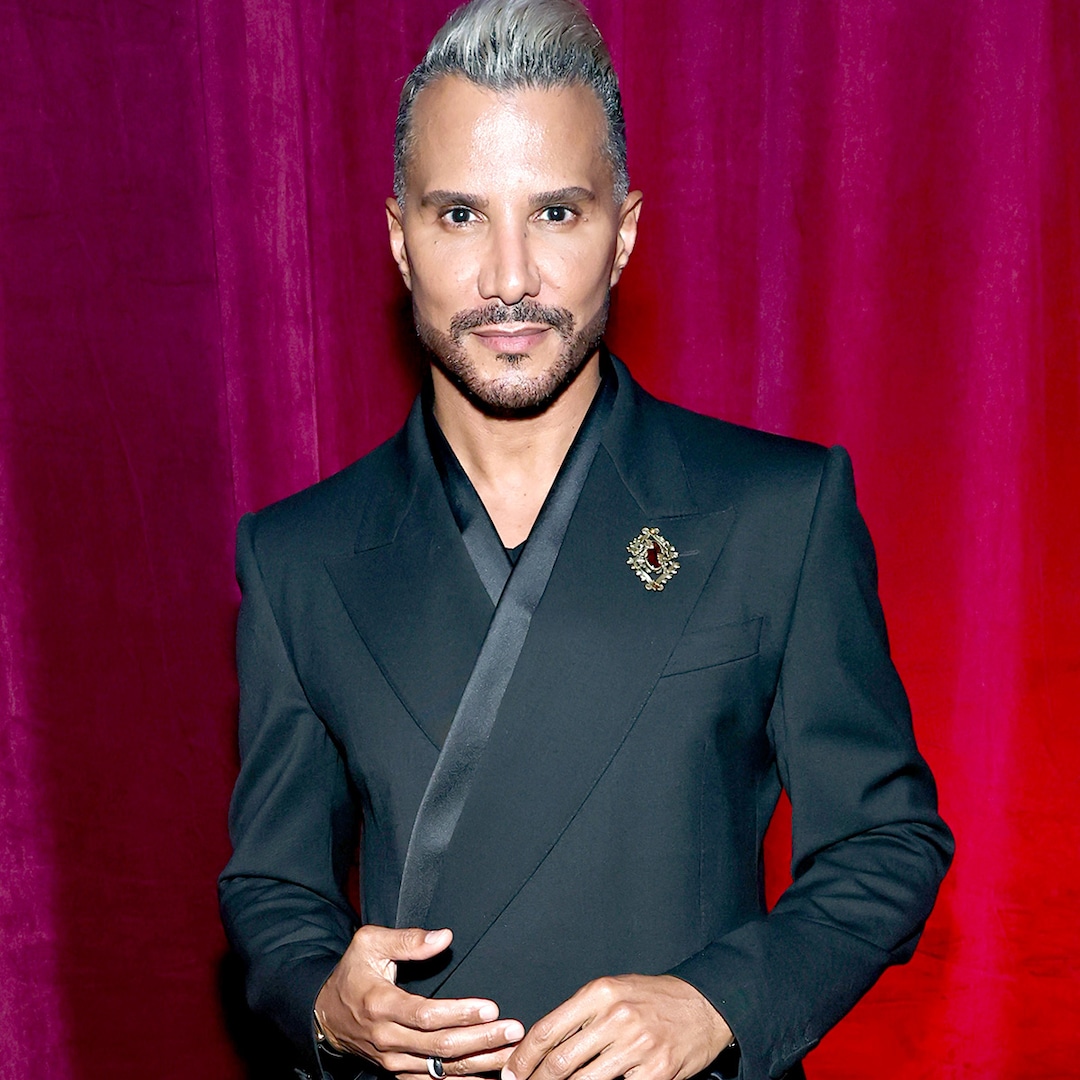

Kay Firth-Butterfield is one of the world’s foremost authorities on artificial intelligence governance, ethics and public policy. Formerly the inaugural Head of AI and Machine Learning at the World Economic Forum, she has advised governments, global institutions and multinational organisations on how AI should be deployed responsibly at scale. Her career spans law, technology and policy, placing her at the centre of global decision-making on AI’s societal impact.

Her expertise continues to be recognised at the highest levels. In 2026, Kay has been selected as a debater at the New York Times celebratory debate in Davos, alongside senior politicians, CEOs and global leaders. She is also due to give evidence to a UK Parliament select committee on AI and law, contributing directly to how future legislation and governance frameworks are shaped.

Her forthcoming book, Coexisting with AI: Work, Love, and Play in a Changing World, brings these perspectives together in a timely and accessible way.

In this exclusive interview with the Champions Speakers Agency, Kay Firth-Butterfield explains why AI must be understood across the full human lifecycle, how individuals and organisations can use it wisely, and why governance, not hype or fear, will determine whether AI becomes a force for shared progress or unintended harm.

“As humans, we also have to make conscious decisions about the climate cost of our use of AI. One of my hopes for the book is that people understand that every prompt uses not just electricity but also around a quarter of a litre of water, and that we begin to ask whether each query is truly worth that cost.” – Kay Firth-Butterfield

Question 1: Your book looks at AI across the full human lifecycle, from childhood through to ageing. Why was it important for you to frame AI not just as a workplace tool, but as something that will shape how we live, learn and relate to one another?

I believe in the power of AI to help humans when we use it wisely. At the moment, across the Global North, people are turning against AI.

They fear it will take their jobs, spread misinformation and reduce our human interaction. Whilst AI is a tool which can be, and is being, used to do those things, it can’t do everything, and if we empower employees, citizens and consumers, we will have a better chance of using AI to achieve gains for ourselves and our future.

The purpose of the book is to enable everyone to understand and participate in the conversations we need to have about our future coexisting with AI.

Question 2: A central message in the book is using AI wisely, whether in business, with children or in everyday life. What does “wise use” actually look like in practice, and where do you see people getting this wrong?

Wise use of AI is my term for using AI responsibly. Most people don’t want to be lectured about acting responsibly or ethically; they think they already do. But if you point out to a company or person that putting guardrails around their use of AI can save them the cost of problems later, they are very willing to act wisely and strategically.

Wise use depends upon what you are using AI for. If it is being used to power lethal autonomous weapons, then it must be held to a very high standard of reliability, and it must be used only for military and not domestic purposes.

In business, ensuring employees understand how often a foundational model hallucinates, and how to spot those hallucinations, will help prevent that business’s data becoming infected with errors. In all spheres, it is about understanding the good and bad and setting yourself or your employees guardrails which work, and that involves understanding AI, hence the book.

Question 3: You explore the role AI may play in politics, conflict and national security. From a governance perspective, what risks concern you most as these technologies become more powerful and more widely deployed?

- Pervasive surveillance, even in democratic states. I have a piece in the book about how Hitler could have used AI.

- Military-grade autonomous weapons being used in civil policing.

- Choosing to use AI for human leadership jobs when it cannot think, feel or act like a human. Humans should be in charge of the machine.

Question 4: The book does not shy away from the good, the bad and the ugly of AI. Why is it important to hold all three realities in view, rather than focusing only on innovation or only on risk?

AI is a tool which can be used for good or bad and, without understanding, it can also be used badly with the best of intentions. So, whilst it can take notes for doctors, if those doctors don’t check the notes for hallucinations then they might make mistaken diagnoses.

Likewise, in insurance or law, for example, if an employee doesn’t catch a deepfaked document, the claim or legal case could be decided on faked evidence, which would undermine profits and the rule of law. AI has come into our lives and will be in our future, but we haven’t been helped to understand the good, bad and ugly. Now is the time to do so, so we humans shape our future with AI.

Kay Firth-Butterfield’s forthcoming book, Coexisting with AI: Work, Love, and Play in a Changing World, explores these themes in greater depth and is available to download now on Amazon.

The post Coexisting With AI: Kay Firth-Butterfield on Governing Technology That Shapes Our Lives appeared first on European Business & Finance Magazine.